CDN vs P2P: Centralization versus Decentralization, the Steps Toward Web3

The rise of digital platforms and the growing demand for live streaming and video on demand (VOD) have sparked a technological debate that questions the fundamentals of how we distribute and consume content online. Nowadays, it’s not uncommon to hear conversations that mix the decentralization of CDNs, Edge Computing, P2P, and Web3. They share a concrete goal: improving the user experience and preparing the ground for new technologies that are even more demanding in terms of delivery and processing, with ever-increasing volumes of data.

This article aims to shed some light on this discussion: what’s coming, and what the strengths and weaknesses of each technology are. We will focus on the CDN as the standard-bearer of centralization and on P2P as its antithesis and the spokesperson for total decentralization.

CDN: Centralized Distribution

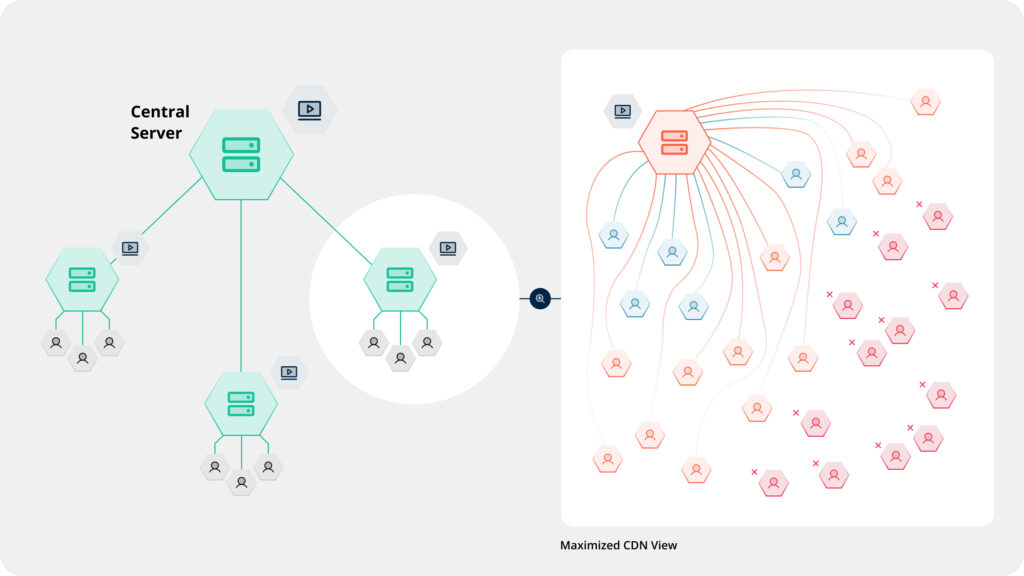

CDNs (Content Delivery Networks) work by placing servers strategically in multiple geographic locations to ensure that users access content quickly. Unlike the fully centralized servers of the past, this evolution seeks to distribute the load among different servers to reduce latency and improve user experience by bringing the content closer to the end access point. Additionally, CDNs offer security benefits, such as protection against DDoS attacks, and their ability to replicate content ensures that there are no interruptions even if a server fails.
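The routing idea described above can be illustrated with a minimal sketch. It assumes each user already has a latency measurement for every server; the `EdgeServer` type, the `pick_edge` function, and the server names are hypothetical and do not correspond to any real CDN API.

```python
from dataclasses import dataclass


@dataclass
class EdgeServer:
    name: str
    latency_ms: float      # round-trip time measured from the user (assumed known)
    healthy: bool = True   # False when the server has failed


def pick_edge(servers: list[EdgeServer]) -> EdgeServer:
    """Return the healthy server with the lowest latency."""
    candidates = [s for s in servers if s.healthy]
    if not candidates:
        raise RuntimeError("no healthy edge server available")
    return min(candidates, key=lambda s: s.latency_ms)


# Illustrative values only.
servers = [
    EdgeServer("paris", 12.0),
    EdgeServer("frankfurt", 25.0),
    EdgeServer("new-york", 95.0),
]
nearest = pick_edge(servers)
```

Because the same content is replicated on every server, marking one as unhealthy simply makes `pick_edge` return the next-closest one, which is the failover behavior the paragraph refers to.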

Content providers rely on CDN services every day to get the job done. However, like everything, CDNs are not infallible. The massive spikes in video consumption of recent years, especially since the COVID-19 pandemic, have shown that any CDN can be overloaded. CDN servers have finite capacity, and the wave of OTT (Over-The-Top) platforms has shown that it can push these giants to their limits, with massive sporting events being the best-known example.

P2P: Decentralized Distribution

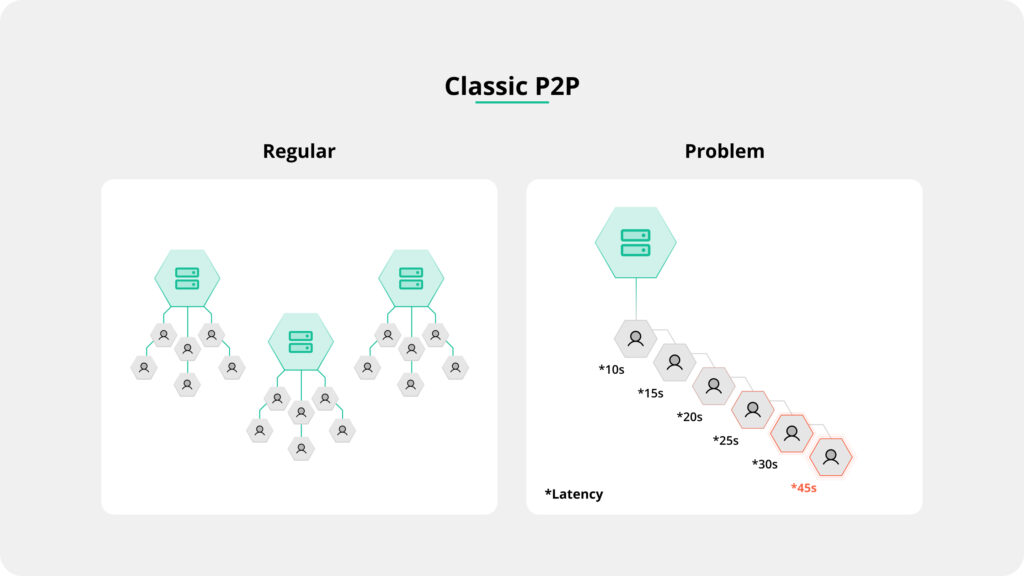

P2P (Peer-to-Peer) leverages the users’ ability to distribute content directly among themselves. Starting from an initial connection from a server, this technology allows users who are watching or downloading the same content to connect. The P2P system can offload part of the burden from the central servers, reducing costs and allowing rapid scaling in high-concurrency events.
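The "initial connection from a server" can be pictured as a small coordination service that groups viewers by the content they are watching. The sketch below is an assumption about how such a service might look, not a description of any specific product; `Tracker`, `join`, and `leave` are hypothetical names.

```python
from collections import defaultdict


class Tracker:
    """Groups peers into swarms, one swarm per piece of content."""

    def __init__(self) -> None:
        self._swarms: dict[str, set[str]] = defaultdict(set)

    def join(self, content_id: str, peer_id: str) -> list[str]:
        """Register a peer and return the other peers on the same content."""
        swarm = self._swarms[content_id]
        others = sorted(p for p in swarm if p != peer_id)
        swarm.add(peer_id)
        return others

    def leave(self, content_id: str, peer_id: str) -> None:
        """Remove a peer that stopped watching or dropped out."""
        self._swarms[content_id].discard(peer_id)


tracker = Tracker()
tracker.join("final-match", "alice")                # first viewer, no peers yet
candidates = tracker.join("final-match", "bob")     # bob learns about alice
```

After this step the server only brokers introductions; the video data itself can travel directly between the peers it returned.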

Although P2P is not a new technology and there have been earlier attempts to deploy it professionally and at scale, it has faced drawbacks that prevented widespread adoption. Latency, low quality, service interruptions when one or more peers drop out, and excessive use of users’ private network bandwidth are some of the best-known challenges.

Classic Challenges of P2P

The traditional P2P model has faced significant challenges that have limited its mass adoption. Among the most notable are:

ISP Restrictions: Some internet providers have limited or blocked P2P traffic due to its high bandwidth demand and its association with illegal activities in the past.

Quality of Service (QoS): Delivery depends on connections between users, which can result in inconsistencies and variations in streaming quality.

Availability of Peers: The effectiveness of P2P depends on having enough active users in the network sharing content in real time.

Security Concerns: Sharing data among peers raises concerns about content privacy and security, including risks of distributing malware or unauthorized content.

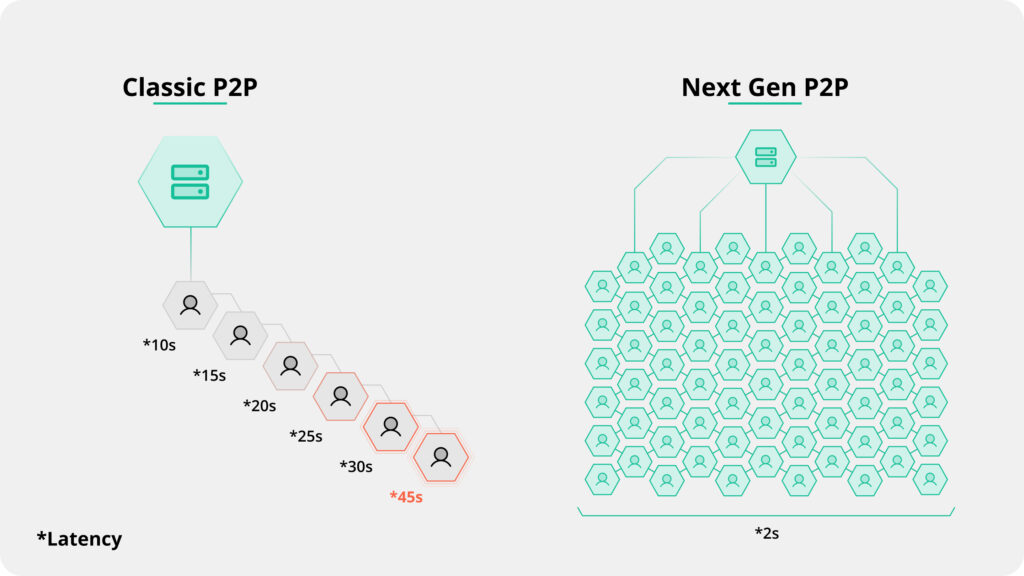

Next-Generation P2P Solutions

With recent innovations, next-generation P2P solutions have mitigated many of these problems. Technology is a tool that evolves; just as we don’t condemn the wheel because the first ones were stone and heavy, we strive to improve it.

Extensive research in universities and research centers has produced algorithmic solutions to the problems this technology presented in the past. This is no accident: total decentralization is an idea that computing theorists have flirted with since the beginning. Advanced algorithms optimize node selection, ensuring better quality of service and greater security. Moreover, protocols like WebRTC, which run natively in the browser over encrypted connections, have helped P2P delivery avoid the restrictions imposed by ISPs, making it a more viable option for modern platforms.
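To give a feel for what "optimizing node selection" can mean, here is a deliberately simple scoring sketch. The metrics, the weights (0.4 / 0.3 / 0.3), and the names `PeerStats`, `score`, and `select_peers` are all assumptions chosen for illustration; production systems use their own, more elaborate algorithms.

```python
from dataclasses import dataclass


@dataclass
class PeerStats:
    peer_id: str
    rtt_ms: float          # measured round-trip time to the peer
    upload_kbps: float     # estimated upload capacity of the peer
    success_rate: float    # fraction of past requests served, 0.0 to 1.0


def score(peer: PeerStats, segment_kbps: float) -> float:
    """Combine bandwidth, latency, and reliability into one score in [0, 1]."""
    bandwidth = min(peer.upload_kbps / segment_kbps, 1.0)  # can it sustain the bitrate?
    latency = 1.0 / (1.0 + peer.rtt_ms / 100.0)            # lower RTT scores higher
    return 0.4 * bandwidth + 0.3 * latency + 0.3 * peer.success_rate


def select_peers(peers: list[PeerStats], segment_kbps: float, k: int = 2) -> list[PeerStats]:
    """Return the k best-scoring peers for the given stream bitrate."""
    ranked = sorted(peers, key=lambda p: score(p, segment_kbps), reverse=True)
    return ranked[:k]


# Illustrative measurements only.
peers = [
    PeerStats("a", rtt_ms=20, upload_kbps=8000, success_rate=0.99),
    PeerStats("b", rtt_ms=200, upload_kbps=1000, success_rate=0.50),
    PeerStats("c", rtt_ms=50, upload_kbps=4000, success_rate=0.90),
]
best = select_peers(peers, segment_kbps=4000)
```

With these sample values the slow, unreliable peer `b` is ranked last, so a viewer would be paired with `a` and `c`.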

As in all science, technologies advance in parallel. Advances in DRM (Digital Rights Management) for encrypting content, and new video players that can integrate more efficient code, have contributed to improving the user experience.

Against this backdrop, the new generation of P2P solutions, which includes companies like QUANTEEC, enables, for example, Ultra Low Latency, something unthinkable 20 years ago. The system is more robust, peers are selected and switched faster, and in the case of QUANTEEC, calculating the energy saved by offloading servers is part of the company’s DNA.

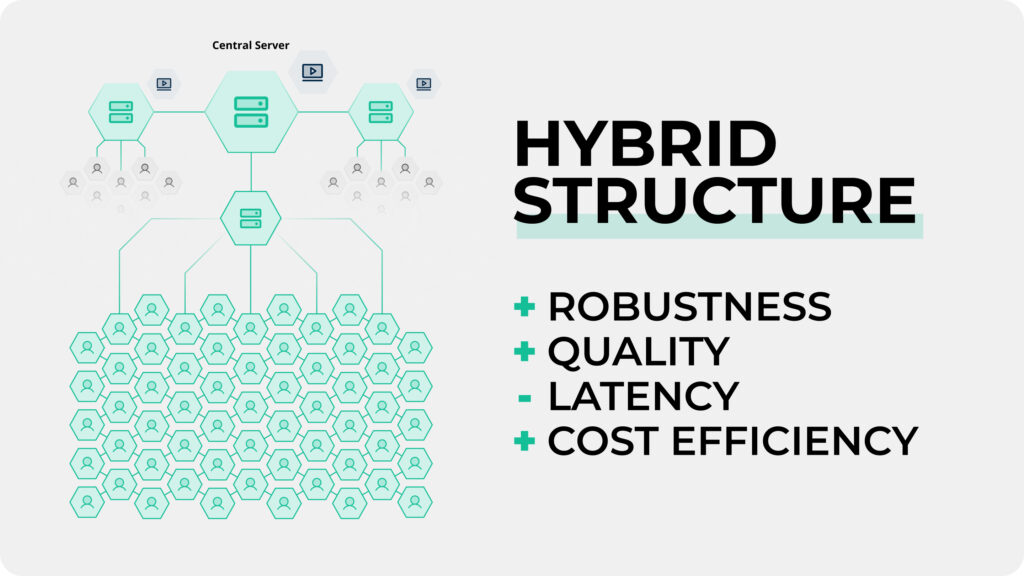

Hybrid CDN-P2P: The Robust Solution

What looked like competition may not be competition after all, and pitting the two against each other turns out to be unnecessary. As we mentioned earlier, P2P needs a server where everything begins. In the case of platform services, it’s the CDN that performs credential validation even before distribution starts; therefore, it is indispensable.

The alternative is the hybrid CDN-P2P approach, which combines the strengths of both technologies. While the CDN ensures stable and reliable content delivery, P2P can take on additional load during demand peaks, such as live streams with thousands or millions of concurrent viewers.
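One way to picture the hybrid model is a player that asks peers for each video segment first and falls back to the CDN when no peer can deliver it. This is a minimal sketch under that assumption; `Fetcher` and `fetch_segment` are hypothetical names, and the peers and CDN are simulated with plain functions rather than real network calls.

```python
from typing import Callable, Optional

# A fetcher returns the segment bytes, or None when it does not have the segment.
Fetcher = Callable[[int], Optional[bytes]]


def fetch_segment(index: int, peers: list[Fetcher], cdn: Fetcher) -> tuple[bytes, str]:
    """Try each peer in order, then fall back to the CDN."""
    for peer in peers:
        try:
            data = peer(index)
        except OSError:
            continue  # peer dropped out or the connection failed
        if data is not None:
            return data, "p2p"
    data = cdn(index)
    if data is None:
        raise LookupError(f"segment {index} is not available on the CDN")
    return data, "cdn"


# Simulated sources, for illustration only.
def gone_peer(index: int) -> Optional[bytes]:
    raise ConnectionError("peer left the swarm")

def empty_peer(index: int) -> Optional[bytes]:
    return None

def good_peer(index: int) -> Optional[bytes]:
    return b"segment-%d" % index

def cdn(index: int) -> Optional[bytes]:
    return b"segment-%d" % index


data, source = fetch_segment(7, [gone_peer, empty_peer, good_peer], cdn)
```

The CDN remains the source of last resort, so playback continues even when every peer fails. A real player would also apply a deadline per segment and switch to the CDN before the playback buffer runs dry.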

This model reduces operational costs by decreasing exclusive reliance on expensive central servers and allows much greater scalability without the need to invest in additional infrastructure. Furthermore, it enhances system robustness, as distributing the load among users avoids outages due to unexpected audience peaks. This also entails a significant energy saving, as consumption in large data centers is reduced by leveraging the capacity of users’ devices.
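The savings argument comes down to simple arithmetic on how much traffic the CDN no longer has to serve. The sketch below works through it with made-up figures (100,000 viewers, a 5 Mbps stream, a 2-hour event, 60% of traffic served by peers); none of these numbers come from a real deployment, and the function names are hypothetical.

```python
def offload_ratio(cdn_bytes: float, p2p_bytes: float) -> float:
    """Fraction of delivered traffic that was served by peers."""
    total = cdn_bytes + p2p_bytes
    return p2p_bytes / total if total else 0.0


def cdn_traffic_gb(viewers: int, bitrate_mbps: float, hours: float, offload: float) -> float:
    """Traffic the CDN still has to serve, in gigabytes (1 GB = 1000 MB)."""
    # megabits per second -> megabytes over the event -> gigabytes
    total_gb = viewers * bitrate_mbps * 3600 * hours / 8 / 1000
    return total_gb * (1.0 - offload)


# Hypothetical event, for illustration only.
without_p2p = cdn_traffic_gb(100_000, 5.0, 2.0, offload=0.0)
with_p2p = cdn_traffic_gb(100_000, 5.0, 2.0, offload=0.6)
saved_gb = without_p2p - with_p2p
```

Under these assumptions each viewer consumes 4.5 GB, so the event totals 450,000 GB, of which the CDN serves about 180,000 GB when peers carry 60% of the load. The same ratio is the natural input for any estimate of cost or energy saved on the server side.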

There’s no need to deploy many more servers to meet growing demand; instead, the existing infrastructure is used efficiently. In this way, a more consistent user experience is achieved, with shorter loading times and better streaming quality, even during peak times.

Edge Computing: A New Ally or a Threat?

Edge Computing is particularly interesting because it sits at a midpoint between centralization and decentralization, and it is where the industry is heading. It is a distributed computing architecture where data processing is performed as close as possible to the point where data is generated or consumed, that is, at the “edge” of the network. This means servers much closer to users, much lower latency, and better quality of service.

While CDNs traditionally cache static content for quick delivery, Edge Computing goes a step further: it allows applications to run and data to be processed in real time on devices or local servers. This is especially useful for applications requiring low latency and real-time processing, such as augmented reality, virtual reality, and online gaming.

Clearly, we are looking at a new type of server and a new way of bringing content closer to the end user, which is entirely compatible with what we’ve been discussing about combining technologies for the benefit of the consumer. Edge Computing can work together with hybrid CDN-P2P solutions to further improve efficiency and offer a superior user experience.

Web3: What’s Coming?

Web3 is coming, sooner rather than later. Decentralization is emerging as a global trend that will develop gradually, built on ideals such as privacy, user data ownership, transparency, and a fully decentralized network.

Web3 represents the next generation of the Internet, where applications and services will no longer be controlled by centralized entities but will operate on decentralized networks based on technologies like blockchain and advanced P2P protocols. This will allow us to create a fairer Internet, where users have greater control over their data and the ability to actively participate in the networks they use.

In the context of content distribution, Web3 could imply that users not only consume content but also contribute to its distribution and storage, being rewarded for it. This aligns with next-generation P2P solutions and the hybrid approach with CDN, paving the way for a more robust, scalable, and sustainable infrastructure.

Key Applications in Live Streaming

Live streaming is the ideal scenario for the hybrid approach and the integration of the mentioned technologies. During events with large audiences, such as sports matches, concerts, or global conferences, P2P can offload the pressure from central servers by distributing content among users. At the same time, the CDN ensures that users with less robust connections or in areas with fewer available peers continue to receive high-quality content.

Additionally, with the incorporation of Edge Computing, the experience can be further improved by processing data in real time near the user, reducing latency, and allowing smoother interactions. This is especially relevant in applications requiring precise synchronization, such as live betting, social media interactions during the event, or augmented reality experiences.

Conclusion

Instead of competing with each other, CDN and P2P can complement each other to offer more robust and efficient solutions. Platforms that implement a hybrid approach can guarantee faster, scalable, and cost-effective content delivery, prepared to meet the growing demand for live streaming and real-time experiences.

This way of viewing content distribution is highly compatible with current and future trends, addressing present challenges such as audience peaks, content proliferation, network bottlenecks, and increased interactivity with users who are far from being passive. By embracing environmentally friendly technologies, we not only enhance user experience but also take responsible steps toward a sustainable digital future.

Science and technology are not black or white; they are a spectrum of grays where new solutions are found to move forward. By combining the strengths of each technology and continuing to innovate, we can build a more robust, efficient, and eco-friendly internet infrastructure prepared for the future that awaits us with the arrival of Web3.